Presentation at the SNIA Storage Developer Conference, Santa Clara, CA, Sept. 16-18, 2024

At SDC 2023, we presented Homa, a new datacenter protocol invented by John Ousterhout at Stanford University. Overall, Homa provides significant advantages over TCP in tail latency and infrastructure efficiency in real-life networks, but it is not compatible with the TCP API. We were told to come back with answers to two questions about Homa:

A) Can Homa and TCP co-exist in the same network peacefully?

B) How can Homa be accelerated to become more useful and applicable for networked storage?

In our presentation this year we will provide those answers and new collaboration results.

First, we will show a network simulation analysis of traffic comprising both Homa and TCP. Because of TCP’s congestion management, the two protocols can not only co-exist but also complement each other to get the best of both: TCP for data streams plus Homa for (shorter) messages with improved tail latencies.

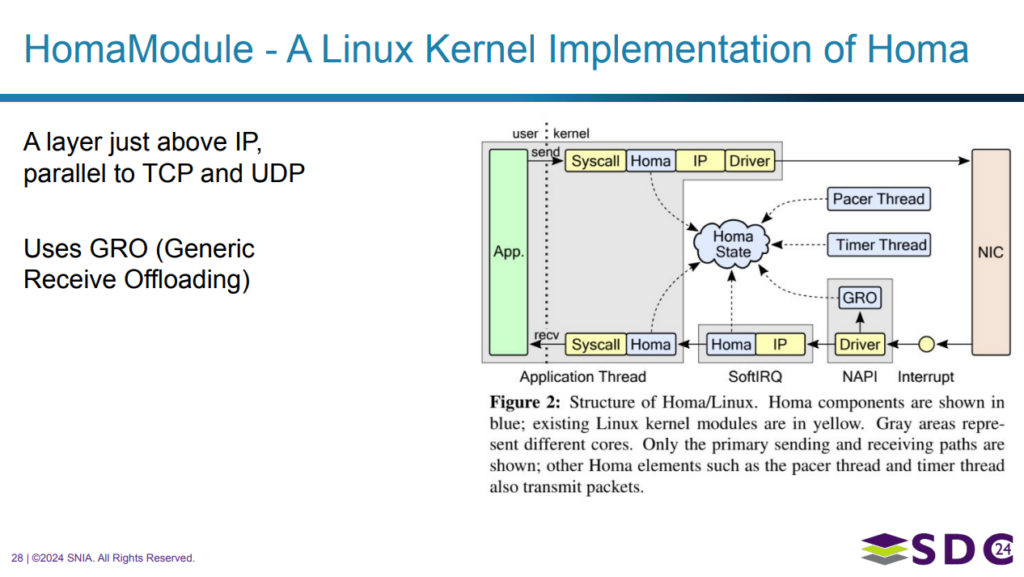

We then look into John’s open source implementation for the Linux kernel, HomaModule, and how it can be combined with another open source project, the FPGA NIC Corundum.io, for FPGA acceleration of the Homa protocol.

We present experimental results from applying known CPU offloading concepts to Homa, in particular receive side scaling (RSS), segmentation offloading, and large receive offloading. After applying the offloads to a traffic pattern with a significant portion of payload chunks larger than the MTU (maximum transmission unit), both the slowdown and the RTT decrease by 5x. The outcome is a Reliable, Rapid, Request-Response Protocol with benefits in storage networking and potential use for networking GPU clusters.