PCI Express (PCIe) Connectivity

ASIC / FPGA PCIe IP Solutions

The Peripheral Component Interconnect Express (PCIe) standard, currently in its sixth generation, is an I/O interconnect technology defined by PCI-SIG. It is a layered protocol that, from a software perspective, is fully backward compatible with the PCI Local Bus standard it replaces.

By combining cost-efficient high-speed serial I/O with PCIe hard-IP blocks, FPGAs can be connected to CPUs, GPUs, or Systems-on-Chip (SoCs), or to other FPGAs via FPGA PCIe tunnels, to form a high-bandwidth, low-latency network in various topologies.

To de-risk PCIe, MLE offers various hardware/software subsystems comprising open source Linux device drivers and pre-integrated FPGA reference designs, including PCIe SSD Host Controller, Linux PCIe Stream, PCIe Non-Transparent Bridging (PCIe NTB), and PCIe Long-Range Tunnel.

PCIe SSD Host Controller

MLE offers NVMe Streamer, a so-called Full Accelerator NVMe host controller integrated into FPGAs. NVMe Streamer offloads the NVMe protocol into programmable logic and lets FPGA blocks stream data into and out of directly attached NVMe SSDs. You can find more details on NVMe Streamer here.

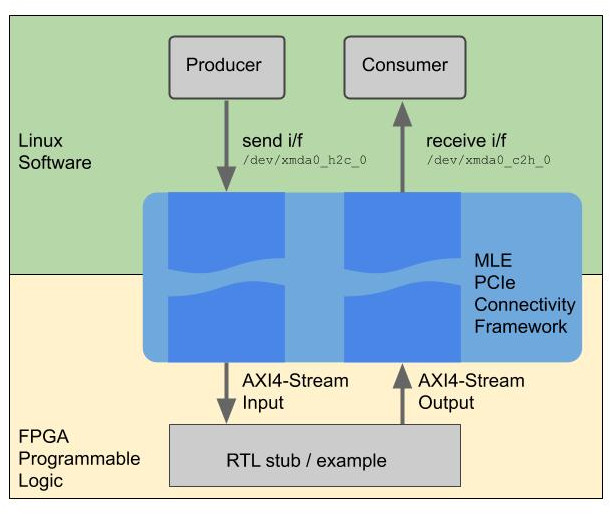

Linux PCIe Stream Framework for AMD/Xilinx FPGAs

Using PCIe as a hardware/software interface in combination with AXI4-Stream suggests a streaming dataflow architecture. This is also known as Producer / Consumer or FIFO-In / FIFO-Out and uses so-called Blocking Reads and Blocking Writes for its interfaces. Because AXI4-Stream implements back-pressure / flow control, a Blocking Read waits until there is data to receive, and a Blocking Write waits before sending more data while the FIFOs are full.
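The blocking semantics described above can be illustrated in software with a bounded queue. This is a minimal sketch only, not the framework's driver API: the names `producer`, `consumer`, and `run_stream`, the FIFO depth, and the `None` end-of-stream marker are all assumptions made for illustration.

```python
import queue
import threading


def producer(fifo, words):
    """Blocking write: put() waits while the bounded FIFO is full."""
    for word in words:
        fifo.put(word)   # blocks here when the FIFO is full (back-pressure)
    fifo.put(None)       # hypothetical end-of-stream marker


def consumer(fifo, sink):
    """Blocking read: get() waits until a word is available."""
    while True:
        word = fifo.get()  # blocks here while the FIFO is empty
        if word is None:
            break
        sink.append(word)


def run_stream(words, depth=4):
    """Move all words through a FIFO of the given depth and return them."""
    fifo = queue.Queue(maxsize=depth)
    sink = []
    t_prod = threading.Thread(target=producer, args=(fifo, words))
    t_cons = threading.Thread(target=consumer, args=(fifo, sink))
    t_prod.start()
    t_cons.start()
    t_prod.join()
    t_cons.join()
    return sink
```

Because both sides block rather than drop data, the order and completeness of the stream are preserved regardless of how small the FIFO is; only throughput changes.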

Our FPGA PCIe Stream Framework for Xilinx FPGAs is a complete hardware/software subsystem comprising Linux device drivers (open source) and Xilinx PCIe function blocks, all delivered as a reference design (Xilinx Vivado project including all necessary TCL scripts, instantiating Xilinx catalogue IP, tested / synthesized on Xilinx Vivado and targeted to the Xilinx VCU118 Devkit).

Our Linux FPGA PCIe Stream Framework supports the Xilinx PCIe hard-IP for the Xilinx 7-series family and newer.

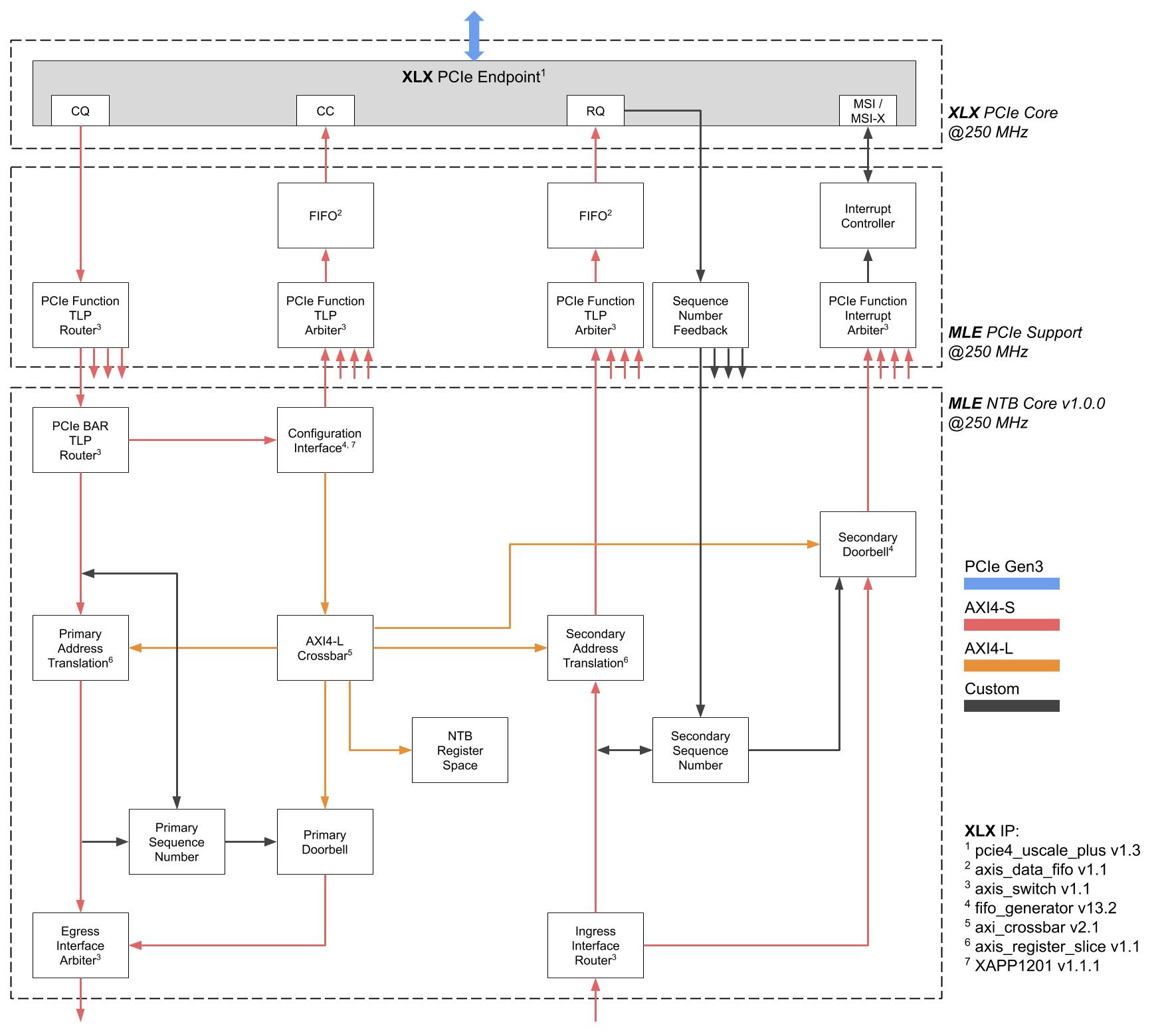

PCIe Non-Transparent Bridging (PCIe NTB)

PCIe NTB stands for PCI Express Non-Transparent Bridge. In a (transparent) PCIe bridge, the Root Complex (RC) “sees” all PCIe buses all the way down to every Endpoint. An NTB also forwards PCIe traffic between separate PCIe buses like a bridge, but each RC sees the NTB as an Endpoint device and does not see the other RC or the devices on the other side. In other words, everything behind the NTB is not directly visible to a given RC, hence “Non-Transparent”.
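The visibility difference can be modeled with a toy topology walk. The sketch below is purely conceptual and does not reflect how real PCIe enumeration or MLE's NTB is implemented; the `Node` type, the `kind` strings, and the device names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    kind: str  # "rc", "bridge", "ntb", or "endpoint" (labels assumed for this sketch)
    children: list = field(default_factory=list)


def enumerate_from(node):
    """Return the names of all devices visible below the given node."""
    seen = []
    for child in node.children:
        seen.append(child.name)
        if child.kind == "bridge":
            # A transparent bridge exposes everything behind it.
            seen.extend(enumerate_from(child))
        # An NTB is listed like an Endpoint; the walk does not continue
        # to the devices on its far side.
    return seen


# Hypothetical topology: rc_a -> sw0 -> {ep_a, ntb0}, with ep_b behind ntb0.
ntb0 = Node("ntb0", "ntb", [Node("ep_b", "endpoint")])
rc_a = Node("rc_a", "rc", [Node("sw0", "bridge", [Node("ep_a", "endpoint"), ntb0])])
visible = enumerate_from(rc_a)
```

In this model `rc_a` finds `sw0`, `ep_a`, and `ntb0`, but never `ep_b`, which sits on the far side of the NTB.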

MLE’s patented PCIe NTB technology is provided as a complete hardware/software subsystem comprising Linux drivers for NTB with a network device API, a readily instantiated PCIe Endpoint, and an on-chip AXI4-Stream switching fabric. It has been optimized for embedded applications such as automotive ECUs, which typically are limited to a maximum of 4 PCIe lanes. By borrowing performance techniques from NVM Express, such as ring buffers and posted writes, we can deliver a high-bandwidth, low-latency, yet resource-efficient implementation.

The MLE Non-Transparent Bridge supports the Xilinx PCIe hard-IP as well as the soft-IP cores XpressRICH Controller IP for PCIe 3.1/3.0 (or newer) from PLDA and Expresso Core from Northwest Logic / Rambus.
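As a rough illustration of the ring-buffer technique mentioned above, the following sketch shows a single-producer, single-consumer ring with head and tail indices. It is a generic textbook structure, not MLE's implementation; the class name `DescriptorRing` and its methods are hypothetical. The producer writes an entry and advances the tail without waiting for any acknowledgement, which is loosely analogous to a posted write.

```python
class DescriptorRing:
    """Fixed-size ring; one slot stays unused to distinguish full from empty."""

    def __init__(self, size):
        self.size = size
        self.slots = [None] * size
        self.head = 0  # next slot the consumer reads
        self.tail = 0  # next slot the producer writes

    def is_empty(self):
        return self.head == self.tail

    def is_full(self):
        return (self.tail + 1) % self.size == self.head

    def post(self, entry):
        """Producer side: store an entry and advance the tail; no reply awaited."""
        if self.is_full():
            return False
        self.slots[self.tail] = entry
        self.tail = (self.tail + 1) % self.size
        return True

    def poll(self):
        """Consumer side: take the oldest entry, or None if the ring is empty."""
        if self.is_empty():
            return None
        entry = self.slots[self.head]
        self.head = (self.head + 1) % self.size
        return entry
```

Each index is written by only one side, so producer and consumer never need to lock a shared counter, which is one reason this layout is popular for hardware/software queues.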

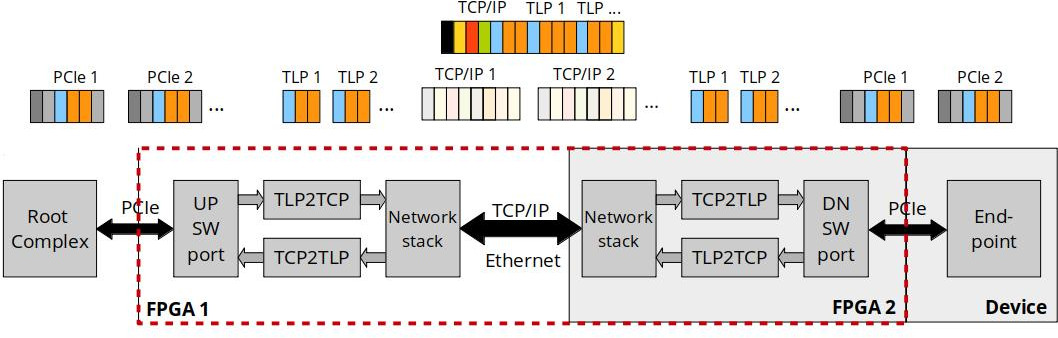

FPGA / ASIC PCIe Long-Range Tunnel

Based on a combination of network protocol acceleration technology from the German Fraunhofer HHI and patented technology from MLE, you can now extend the range of your PCIe connectivity well beyond the 12 inches supported by the standard, without the need for costly PCIe cables.

The fundamental concept is to transport PCIe Transaction Layer Packets (TLPs) over TCP/IP, all implemented as a digital circuit in an ASIC or FPGA. This guarantees reliable delivery and very deterministic delivery times. Optionally, this can be complemented by real-time Ethernet such as AVB or TSN.
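Since TCP delivers a byte stream rather than discrete packets, any tunnel of this kind needs some way to recover TLP boundaries at the receiver. The sketch below shows one common approach, a length prefix per packet. The actual encapsulation used by the PCIe Long-Range Tunnel is not described here, so the 2-byte header, `frame_tlp`, and `TlpDeframer` are assumptions for illustration only, and the payloads are opaque bytes rather than valid TLPs.

```python
import struct

# Hypothetical framing: 2-byte big-endian length, then the raw TLP bytes.
HEADER = struct.Struct("!H")


def frame_tlp(tlp: bytes) -> bytes:
    """Prefix one TLP with its length so it can be recovered from a byte stream."""
    if len(tlp) > 0xFFFF:
        raise ValueError("TLP too large for the 2-byte length prefix")
    return HEADER.pack(len(tlp)) + tlp


class TlpDeframer:
    """Reassemble TLPs from arbitrarily sized chunks of a byte stream."""

    def __init__(self):
        self._buf = bytearray()

    def feed(self, chunk: bytes) -> list:
        """Add received bytes and return every TLP that is now complete."""
        self._buf += chunk
        complete = []
        while len(self._buf) >= HEADER.size:
            (length,) = HEADER.unpack_from(self._buf)
            end = HEADER.size + length
            if len(self._buf) < end:
                break  # wait for the rest of this TLP
            complete.append(bytes(self._buf[HEADER.size:end]))
            del self._buf[:end]
        return complete
```

A receiver would call `feed()` with whatever each socket read returns; TLPs come out whole and in order even when a packet is split across reads or several packets arrive in one read.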

Our PCIe Long-Range Tunnel subsystem supports the Xilinx PCIe hard-IP as well as the soft-IP cores XpressRICH Controller IP for PCIe 3.1/3.0 (or newer) from PLDA and Expresso Core from Northwest Logic / Rambus.

Pricing of PCIe IP Cores

Our PCIe Connectivity solutions are available as a combination of PCIe IP (Intellectual Property) Cores, reference designs, and design integration services:

| Product Name | Deliverables | Example Pricing |
|---|---|---|
| Linux FPGA PCIe Stream Framework for Xilinx | Single-Project or Multi-Project Use; Xilinx FPGA only; reference design project delivered as encrypted netlist or RTL. | starting at $17,000.- |
| NVMe Streamer | Complete, downloadable NVMe Host and Full Accelerator subsystem integrated into the ERD example system. Delivered as Vivado design project with encrypted RTL code. | starting at $24,800.- |
| PCIe Non-Transparent Bridge (NTB) | Single-Project or Multi-Project Use; ASIC or FPGA; Modular and application-specific IP cores, and example design projects; delivered as encrypted netlists or RTL. | starting at $52,000.- |
| PCIe Long-Range Tunnel | Single-Project or Multi-Project Use; ASIC or FPGA; Modular and application-specific IP cores, and example design projects; delivered as encrypted netlists or RTL. | starting at $66,000.- |
| FPGA Application-specific R&D Services | Expert PCIe R&D services for Intel or Xilinx FPGA. | $1,880.- per engineering day (or fixed price project fee) |

Documentation

- Architecture and Performance of Integrated High-speed and Versatile Embedded Networking (Embedded World 2024)

- Zone-Based Automotive Backbones Tunneling PCIe® (PCI-SIG Developers Conference 2021)

- Sensor Fusion and Data-in-Motion Processing for Autonomous Vehicles (PCI-SIG Developers Conference 2019)

- PCIe Range Extension via Robust, Long-Reach Protocol Tunnels (PCI-SIG Developers Conference 2018)