TCP/UDP/IP Network Protocol Accelerator Platform (NPAP)

Accelerate Network Protocols with FPGA TCP/UDP/IP Stack

The German Fraunhofer Heinrich-Hertz-Institute (HHI) has partnered with MLE to market the proven network accelerator “TCP/IP & UDP Network Protocol Accelerator Platform (NPAP)”. This customizable solution enables high-bandwidth, low-latency communication for FPGA- and ASIC-based systems over 1G / 2.5G / 5G / 10G / 25G / 40G / 50G / 100G Ethernet links.

MLE is a licensee of Fraunhofer HHI and offers a range of technology services, sublicenses and business models compatible with customers’ ASIC or FPGA project settings, world-wide.

If you are interested in optimizing your Linux-based system for best performance with NPAP, we suggest reading this technical publication from Bruno Leitao, IBM: “Tuning 10Gb network cards on Linux”.

Core Benefits

- Accelerate CPUs by offloading TCP/UDP/IP processing into programmable logic (“Offloading”)

- Increase network throughput and reduce transport latency

- Bring full TCP/UDP/IP connectivity to FPGAs even if no CPU is available (“Full Acceleration”)

- Complete and customizable turn-key solutions and IP cores based on the TCP/UDP/IP stack from the Fraunhofer HHI

- All MAC / Ethernet / IPv4 / UDP / TCP processing is implemented in HDL code, synthesizable to modern FPGAs and ASICs

- User applications can either be implemented in FPGA logic or in software via application-specific interfaces to CPUs

Key Features

- Highly modular TCP/UDP/IP stack implementation in synthesizable HDL

- Full line rate of 70 Gbps or more in FPGA, 100 Gbps or more in ASIC

- 128-bit wide bi-directional data paths with streaming interfaces

- Multiple, parallel TCP engines for scalable processing

- Network Interface Card functionality with Bypass (optional)

- DPDK Stream interface (optional)

- Corundum NIC integration with performance DMA and PCIe (optional)

Applications

- FPGA-based SmartNICs

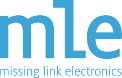

- In-Network Compute Acceleration (INCA)

- Hardware-only implementation of TCP/IP in FPGA

- PCIe Long Range Extension

- Networked storage, such as iSCSI

- Test & Measurement connectivity

- Automotive backbone connectivity based on open standards

- Video-over-IP for 3G / 6G / 12G transports

- Increase throughput for 10G/25G/50G/100G Ethernet

- Reduce latency in System-of-Systems communication

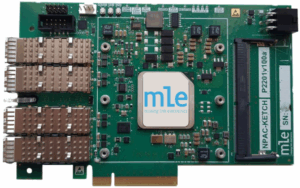

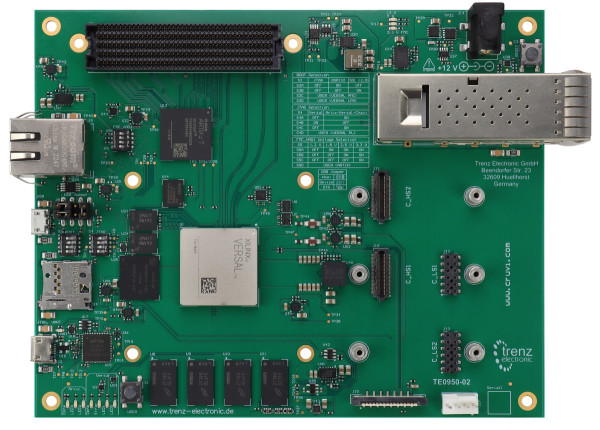

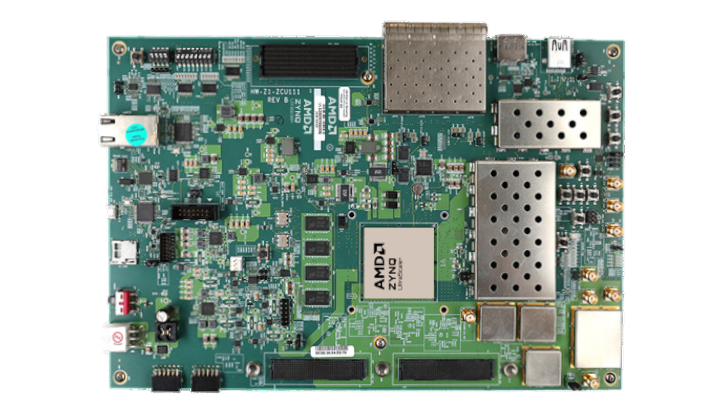

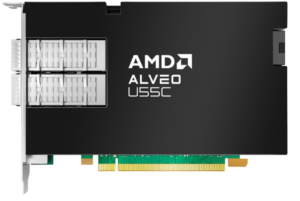

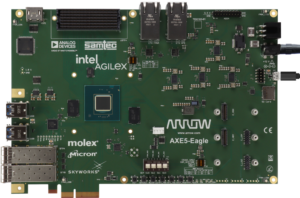

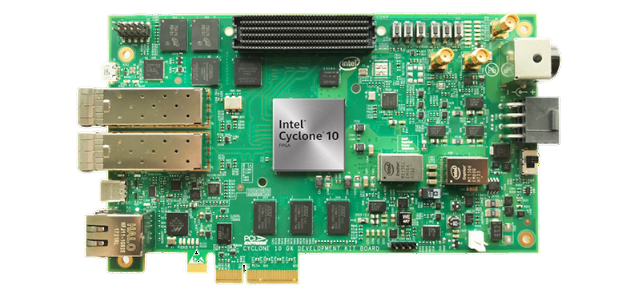

Evaluation Systems for TCP/IP & UDP Network Protocol Accelerator Platform (NPAP)


Pricing

MLE’s Network Protocol Accelerator Platform (NPAP) is available as a combination of Intellectual Property (IP) Cores, reference designs, and design integration services:

| Product Name | Deliverables | Example Pricing |
|---|---|---|
| Network Processing Device | Integrated processing device solution, built on top of leading FPGA technology, encapsulating one or more Network Protocol Accelerators for 1GbE and/or 10GbE. | Based on NRE and unit volume (please inquire) |
| Intellectual Property (IP) Cores | Single-Project or Multi-Project Use; ASIC or FPGA; modular and application-specific IP cores, and example design projects; delivered as encrypted netlists or RTL. | Starting at $78,000.- (depends on FPGA device and line rate, please inquire) |
| Evaluation Reference Design (ERD) | Available upon request as FPGA design project, with optional customizations (different target device, different transceivers, etc.) | Free of charge |
| Application-specific R&D Services | Advanced network protocol acceleration R&D services with access to acceleration experts from Fraunhofer HHI and/or MLE. | $1,880.- per engineering day (or fixed price project fee) |

Documentation

- MLE Network Protocol Accelerator Platform (NPAP) Datasheet (updated November 2025), covering:

  - IP Core and features description

  - Architecture choices

  - Latency analysis results

  - FPGA resource numbers for AMD/Xilinx, Intel, Microsemi

  - IETF RFC 1122 protocols supported

  - IP Core code changelog

- MLE Network Protocol Accelerator Platform (NPAP) Evaluation Guide

- Product Brochure – 10G/25G/50G/100G/200G/400G TCP / UDP / IP Solutions for AMD/Xilinx FPGAs

- Product Brochure – 10G/25G/50G/100G/200G/400G TCP / UDP / IP Solutions for Intel/Altera FPGAs

- Product Brochure – Network Protocol Accelerator Card – NPAC-40G

- Product Brochure – NPAP-100G for Xilinx Alveo Cards

NPAP-relevant Product Guides are available under NDA:

- Product Guide – NPAP IP Core

- Product Guide – NPAP Control Application IP Core

- Product Guide – TCP Demo Application IP Core

- Product Guide – TCP Command Application IP Core

- Product Guide – UDP Demo Application IP Core

- Product Guide – Data Generator and Checker IP Core

- A TCP/IP Stack for High-Performance Chip-to-Chip

- Analyzing Network Impairment and Signal Integrity in High-Speed TCP/UDP/IP Ethernet Networks

- Myth-Busting Latency Numbers for TCP Offload Engines

- High-Speed Data Acquisition Systems

- Put a TCP/UDP/IP Turbo Into Your FPGA-SmartNIC

- Latency Measurement of 10G/25G/50G/100G TCP-Cores using RTL Simulation

- Deterministic Networking with TCP-TSN-Cores for 10/25/50/100 Gigabit Ethernet

About In-Network Compute Acceleration (INCA)

- Complementing TCP with Homa, Stanford’s Reliable, Rapid Request-Response Protocol

- TCP/IP for Real-Time Embedded Systems: The Good, the Bad and the Ugly

- CORUNDUM – From a NIC to a Platform for In-Network Compute

- Low-Latency Networking for Systems-of-Systems

- A 10 GbE TCP/IP Hardware Stack as part of a Protocol Acceleration Platform

- Low-Latency Solutions for Storage-Hungry Embedded Applications

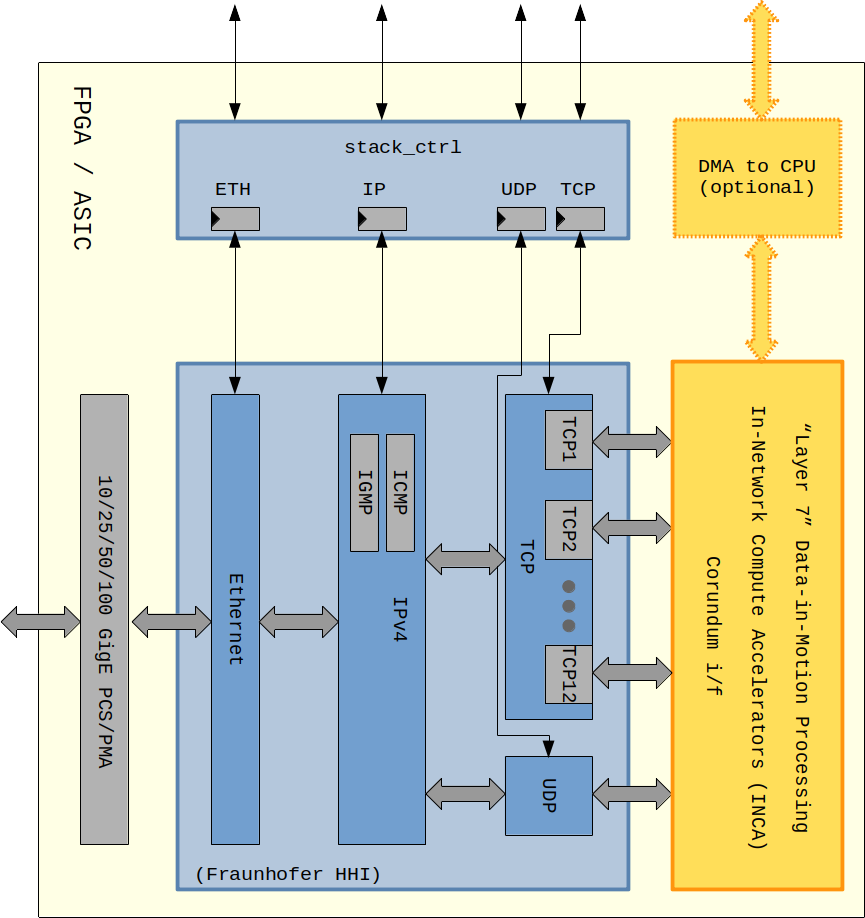

Encrypted Network Acceleration Solutions (ENAS)

TCP-TLS 1.3 for Secure 10/25/50 GigE

ENAS are joint solutions that combine MLE’s TCP/IP Network Protocol Accelerator Platform (NPAP) with Xiphera’s Transport Layer Security (TLS) 1.3 to ensure secure and reliable connections between devices over LAN and WAN. They implement Transport Layer Security, a cryptographic protocol that provides end-to-end data security, on top of the Transmission Control Protocol (TCP) layer.

Frequently Asked Questions

How compatible is Network Protocol Accelerator Platform (NPAP) with other NICs?

NPAP is integrated with the FPGA vendor’s PCS/PMA layer and thus is compatible with other IEEE-compliant Ethernet Network Interface Cards (NICs) for 1 GigE, 10 GigE, 25 GigE, 40 GigE, 50 GigE and 100 GigE. Please refer to the FPGA device vendor’s documentation of the subsystem for further information.

How compatible is Network Protocol Accelerator Platform (NPAP) with other TCP/UDP/IP stacks?

NPAP implements all networking functions required by IETF RFC 1122 and thus is interoperable with software stacks from Microsoft Windows, Open-Source Linux (3.x or newer) as well as Mellanox/libvma or SolarFlare OpenOnload. Please refer to the NPAP Datasheet for more information.
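Because the stack speaks standard TCP, a software peer should not need anything beyond the ordinary socket API of its operating system. The following is a minimal sketch of such a peer, not MLE code: the function name `exchange` is hypothetical, and it assumes the FPGA side runs an application that echoes back whatever it receives, which is an assumption made purely for illustration.

```python
import socket


def exchange(host: str, port: int, payload: bytes, timeout: float = 2.0) -> bytes:
    """Send `payload` over a plain TCP connection and read the echoed bytes.

    Assumption: the peer (for example an FPGA running a TCP/IP stack with an
    echo-style application) returns exactly the bytes it was sent.
    """
    # create_connection() performs the normal three-way handshake using the
    # operating system's own TCP stack; nothing here is vendor-specific.
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(payload)
        received = bytearray()
        # TCP is a byte stream, so the reply may arrive in several chunks.
        while len(received) < len(payload):
            chunk = sock.recv(65536)
            if not chunk:  # peer closed the connection early
                break
            received.extend(chunk)
        return bytes(received)
```

A call such as `exchange("192.0.2.10", 50000, b"ping")` would then talk to a device at that address; both the address (taken from the documentation range) and the port are placeholders.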

Does Network Protocol Accelerator Platform (NPAP) use embedded Block-RAM (BRAM), and how much?

Yes. Typically, we configure and instantiate NPAP with BRAMs for the Rx/Tx buffers. For applications where NPAP transmits data to a server, we suggest 128K Bytes per TCP session (i.e. TCP port instance) to accommodate the (slower) processing of the software TCP stack running on the recipient. Please refer to the NPAP Datasheet for more information.

What are typical TCP buffer sizes?

TCP buffer sizes for NPAP follow from the bandwidth-delay product (keep in mind that TCP buffers are placed on both ends, Tx side and Rx side):

Buffer size (in bits) = Bandwidth (in bits-per-second) * RTT (in seconds)

RTT is the Round-Trip Time which is the time for the Sender to transmit the data plus the time-of-flight for the data, plus the time it takes the Recipient to check for packet correctness (CRC), plus the time for the Recipient to send out the ACK, plus the time-of-flight for the ACK, plus the time it takes the Sender to process the ACK and release the buffer.

For example:

- If the recipient is NPAP in a direct connection, we can assume ACK times of less than 20 microseconds. At a 10 Gbps line rate (the rate these numbers imply), buffer sizes then need to be 200k bits, or 25 kBytes, so 32 kBytes of on-chip BlockRAM per TCP session will be sufficient.

- If the recipient is software, RTT can be much longer, mostly due to the longer processing times in the OS on the recipient side. For a modern Linux we can assume an RTT of 100 microseconds or longer (see here [1] or run a ‘ping localhost’ on your machine). Buffer sizes then need to be around 1M bits, which matches the 128K Bytes of BRAM we typically instantiate.
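The two examples can be reproduced with a few lines of arithmetic. The sketch below assumes a 10 Gbps line rate, which is what the 200k-bit and 1M-bit figures imply, and rounds the result up to the next power of two as a simple stand-in for how memory is typically allocated; that rounding rule and the function names are assumptions for illustration, not part of NPAP.

```python
def tcp_buffer_bits(bandwidth_bps: int, rtt_us: int) -> int:
    """Bandwidth-delay product: buffer size in bits.

    Integer arithmetic (RTT in microseconds) avoids floating-point rounding.
    """
    return bandwidth_bps * rtt_us // 1_000_000


def buffer_bytes_pow2(bandwidth_bps: int, rtt_us: int) -> int:
    """Required bytes, rounded up to the next power of two (an assumption)."""
    bits = tcp_buffer_bits(bandwidth_bps, rtt_us)
    required_bytes = -(-bits // 8)  # ceiling division
    return 1 << (required_bytes - 1).bit_length()


LINE_RATE_BPS = 10_000_000_000  # assumed 10 Gbps link

if __name__ == "__main__":
    for label, rtt_us in (("NPAP recipient", 20), ("software recipient", 100)):
        bits = tcp_buffer_bits(LINE_RATE_BPS, rtt_us)
        size = buffer_bytes_pow2(LINE_RATE_BPS, rtt_us)
        print(f"{label}: {bits} bits -> {size // 1024} KiB buffer")
```

For the two RTT values above this yields 32 KiB and 128 KiB. The same functions can be used to estimate buffers for other line rates or for a measured RTT.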

Fraunhofer HHI

Founded in 1949, the German Fraunhofer-Gesellschaft undertakes applied research of direct utility to private and public enterprise and of wide benefit to society. With a workforce of over 23,000, the Fraunhofer-Gesellschaft is Europe’s biggest organization for applied research, and currently operates a total of 67 institutes and research units. The organization’s core task is to carry out research of practical utility in close cooperation with its customers from industry and the public sector.

Fraunhofer HHI was founded in 1928 as “Heinrich-Hertz-Institut für Schwingungsforschung” and joined the Fraunhofer-Gesellschaft in 2003 as the “Fraunhofer Institute for Telecommunications, Heinrich-Hertz-Institut”. Today it is the leading research institute for networking and telecommunications technology, “Driving the Gigabit Society”.