NVMe Streamer

NVMe IP Cores for Storage Acceleration

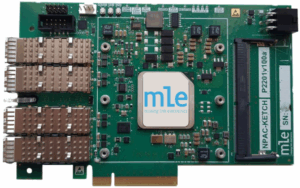

NVMe (Non-Volatile Memory Express) has become the prominent choice for connecting Solid-State Drives (SSDs) when storage read/write bandwidth is key. The NVMe protocol runs on top of PCIe. It leaves behind legacy protocols such as AHCI and therefore scales well in performance. MLE has been integrating PCIe and NVMe into FPGA-based systems for some time. MLE now releases NVMe Streamer, an IP core for NVMe streaming. It is a so-called Full Accelerator NVMe host subsystem integrated into FPGAs, most prominently into Xilinx Zynq UltraScale+ MPSoC and RFSoC devices.

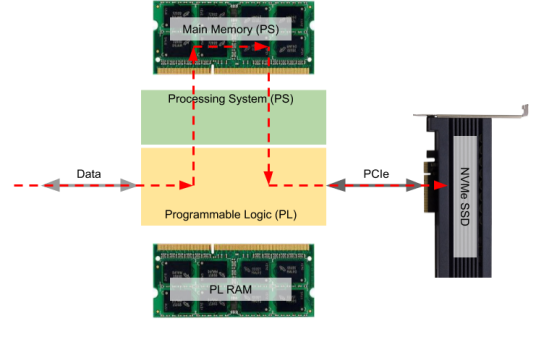

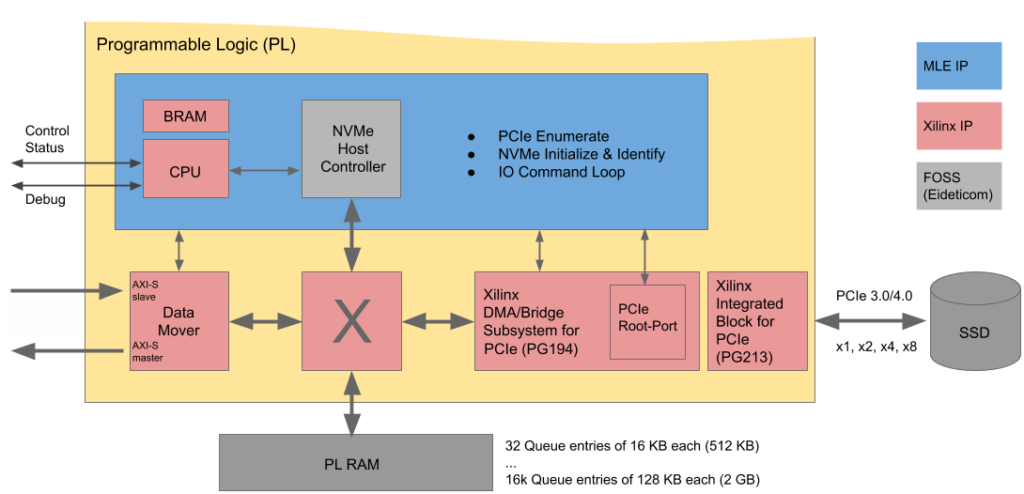

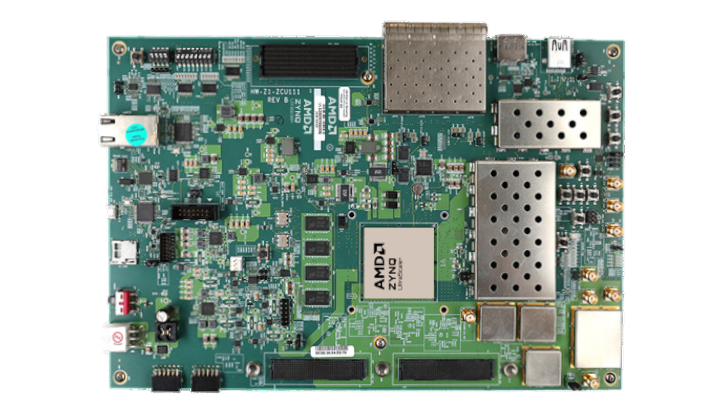

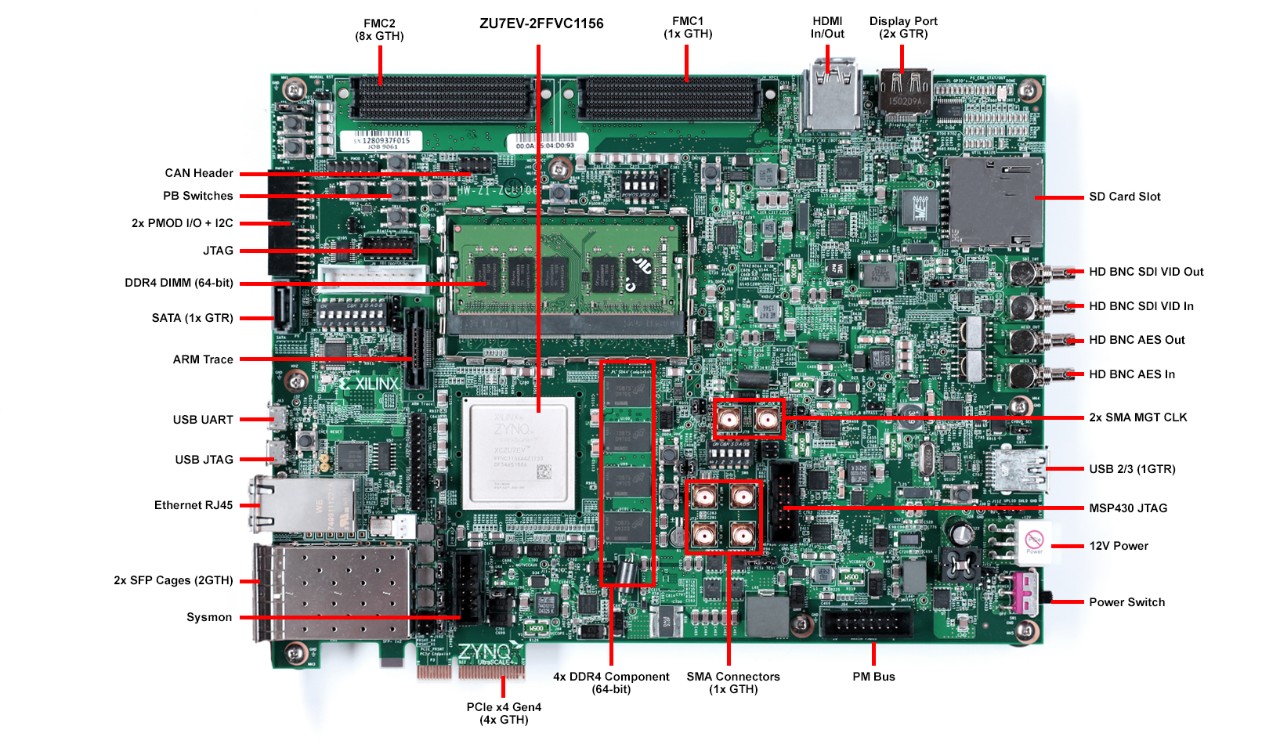

MLE’s new NVMe Streamer is the result of many successful customer projects and responds to the embedded market’s need to make use of modern SSDs. NVMe Streamer is a fully integrated and pre-validated subsystem stack that runs the NVMe protocol entirely in Programmable Logic (PL), with no software involved, which keeps the Processing System (PS) out of the performance path. On Xilinx FPGAs, NVMe Streamer uses Xilinx GTH and GTY Multi-Gigabit Transceivers together with Xilinx PCIe Hard IP Cores for physical PCIe connectivity.
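
To give an idea of what "running the NVMe protocol in PL" involves, here is a minimal Python sketch of the controller bring-up steps defined by the NVMe Base Specification. The register offsets and the CC/AQA field positions come from that specification. This is not MLE's implementation, which is RTL. `MockController`, `wait_ready` and `init_controller` are hypothetical names. The mock also omits several parts of a full bring-up: the CAP.TO timeout, error handling, and the Identify and Create I/O Queue admin commands that follow.

```python
# Controller register offsets in BAR0, per the NVMe Base Specification.
CAP, CC, CSTS, AQA, ASQ, ACQ = 0x00, 0x14, 0x1C, 0x24, 0x28, 0x30


class MockController:
    """Hypothetical stand-in for an SSD's BAR0; CSTS.RDY simply mirrors CC.EN."""

    def __init__(self) -> None:
        self.regs = {CAP: 0, CC: 0, CSTS: 0, AQA: 0, ASQ: 0, ACQ: 0}

    def read(self, offset: int) -> int:
        return self.regs[offset]

    def write(self, offset: int, value: int) -> None:
        self.regs[offset] = value
        if offset == CC:
            # A real controller changes RDY asynchronously; the mock does it at once.
            self.regs[CSTS] = (self.regs[CSTS] & ~1) | (value & 1)


def wait_ready(ctrl: MockController, want: int, max_polls: int = 1000) -> None:
    """Poll CSTS.RDY (bit 0) until it equals `want`."""
    for _ in range(max_polls):
        if (ctrl.read(CSTS) & 1) == want:
            return
    raise TimeoutError(f"CSTS.RDY did not become {want}")


def init_controller(ctrl: MockController, asq_addr: int, acq_addr: int,
                    admin_depth: int = 32) -> int:
    """Bring an NVMe controller from disabled to ready; returns the CC value written."""
    # 1. Disable the controller and wait for CSTS.RDY = 0.
    ctrl.write(CC, 0)
    wait_ready(ctrl, 0)
    # 2. Admin queue sizes are 0's based: ACQS in bits 27:16, ASQS in bits 11:0.
    ctrl.write(AQA, ((admin_depth - 1) << 16) | (admin_depth - 1))
    # 3. Base addresses of the admin submission and completion queues.
    ctrl.write(ASQ, asq_addr)
    ctrl.write(ACQ, acq_addr)
    # 4. IOCQES=4 (16-byte CQ entries), IOSQES=6 (64-byte SQ entries),
    #    MPS=0 (4 KiB pages), CSS=0 (NVM command set), EN=1.
    cc = (4 << 20) | (6 << 16) | (0 << 7) | (0 << 4) | 1
    ctrl.write(CC, cc)
    # 5. Wait until the controller reports ready.
    wait_ready(ctrl, 1)
    return cc
```

Only after these steps can the host issue admin commands such as Identify and create the I/O queue that carries streaming data.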

Key Features

- Provides one or more NVMe / PCIe host ports for NVMe SSD connectivity

- Full Acceleration means “CPU-less” operation

- Fully integrated and tested NVMe Host Controller IP Core

- PCIe Enumeration, NVMe Initialization & Identify, Queue Management

- Control & Status interface for IO commands and drive administration

- Approx. 50k LUTs and 170 BRAM tiles (for Xilinx UltraScale+)

- Compatible with PCIe Gen 1 (2.5 GT/sec), Gen 2 (5 GT/sec), Gen 3 (8 GT/sec), Gen 4 (16 GT/sec) speeds

- Scalable to PCIe x1, x2, x4 and x8 lanes
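
As a rough guide to what the listed speeds and lane counts mean in bytes, the following Python sketch computes the theoretical one-direction link bandwidth after line encoding. The PCIe specifications define 8b/10b encoding for Gen 1 and Gen 2 and 128b/130b for Gen 3 and Gen 4. The sketch ignores packet and protocol overhead, so it gives an upper bound, not a measured NVMe Streamer throughput figure. `link_bandwidth_mb_s` is a hypothetical helper name.

```python
# Per-lane transfer rate (GT/s) and line-encoding efficiency per PCIe generation.
PCIE_GENS = {
    1: (2.5, 8 / 10),      # 8b/10b encoding
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
    4: (16.0, 128 / 130),  # 128b/130b encoding
}


def link_bandwidth_mb_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in MB/s (10**6 bytes/s) after line encoding.

    Ignores TLP/DLLP protocol overhead, so real NVMe throughput is lower.
    """
    if lanes not in (1, 2, 4, 8):
        raise ValueError("lane counts listed for NVMe Streamer are x1, x2, x4 and x8")
    gt_per_s, efficiency = PCIE_GENS[gen]
    bits_per_s = gt_per_s * 1e9 * efficiency * lanes
    return bits_per_s / 8 / 1e6


for gen in PCIE_GENS:
    print(f"Gen {gen}: x1 {link_bandwidth_mb_s(gen, 1):7.1f} MB/s, "
          f"x4 {link_bandwidth_mb_s(gen, 4):7.1f} MB/s")
```

For example, a Gen 4 x4 link works out to roughly 7.9 GB/s before protocol overhead. Sustained rates in practice also depend on the SSD itself.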

Applications

- High-speed analog and digital data acquisition

- Lossless and gapless recording of sensor data

- Automotive / Aerospace Data Logging

- Data streaming from SSDs

- Storage protocol offloading

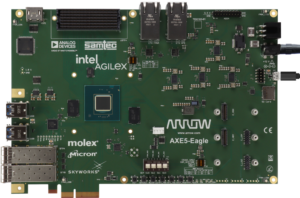

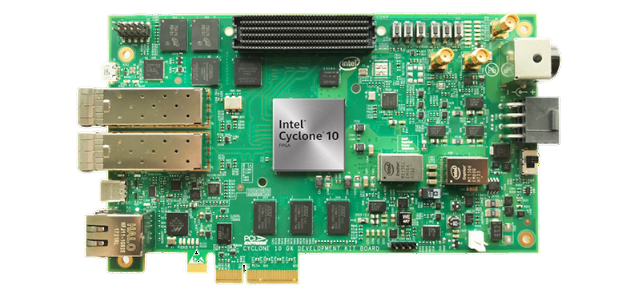

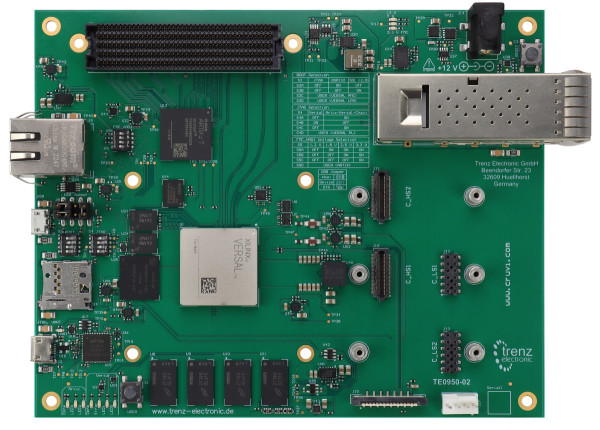

Evaluation System for NVMe Streamer (NVMe IP)

Accelerate Data Storage for M.2 SSDs

FPGA Drive FMC Gen4

- Enables direct connection of M.2 NVMe SSDs to FPGA boards

- Combine with MLE’s NVMe Streamer to achieve maximum M.2 SSD storage throughput

- Supports up to four PCIe Gen 4 lanes (16 GT/s) per SSD

- Connects to FPGA boards with FMC/FMC+ and MGTs

Accelerate Data Storage for U.2 SSDs

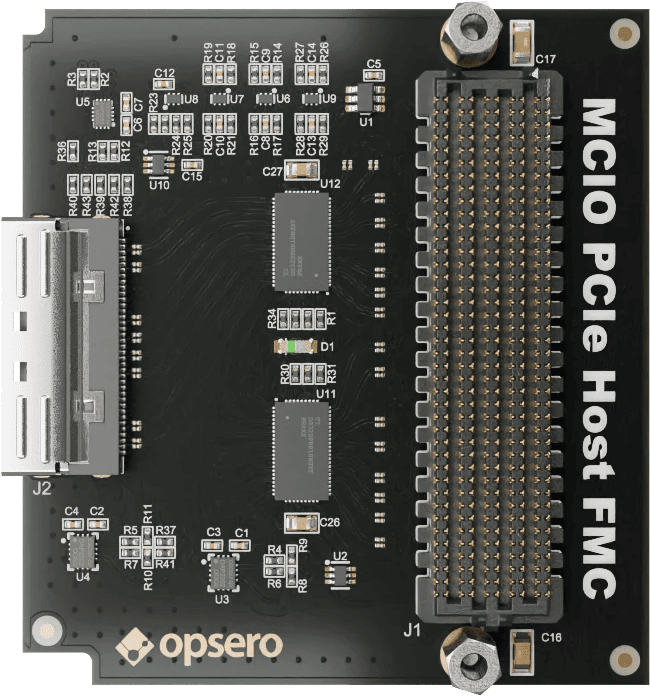

Opsero MCIO PCIe Host FMC

- Enables direct connection of U.2 NVMe SSDs to FPGA boards

- Combine with MLE’s NVMe Streamer to achieve maximum U.2 SSD storage throughput

- Supports MCIO PCIe x8 or dual PCIe x4 configurations

- Equipped with PCIe redrivers for enhanced signal integrity at 16 GT/s Gen 4 speeds

- Connects to FPGA boards with FMC/FMC+ and MGTs

Pricing

MLE’s license fee structure reflects the need for a simple and affordable NVMe IP Core for connectivity:

| Product Name | Deliverables | Example Pricing |
|---|---|---|
| Evaluation Reference Design (NVMe Streamer ERD) | Binary-only system stack compiled under Vivado. Tried and tested to work on the AMD Versal™, UltraScale+™ MPSoC / RFSoC and Zynq™ 7000 SoC evaluation kits. Evaluation-only license, valid for 30 days. | Free of charge |
| Production Reference Design – Professional Edition (NVMe Streamer PRD-PE) | Complete, downloadable NVMe Host and Full Accelerator subsystem integrated into the ERD example system. Delivered as a Vivado design project with encrypted RTL code. Production-ready: pre-integrated and tested to be portable to your target system hardware. Fully paid-up, royalty-free, worldwide, single-project-use license, synthesizable for 1 year. Up to 40 hours of premium support, customization and/or integration design services via email, phone or online collaboration. | Starting at $24,800 |
| Application- / Project-specific Expert Design Services | System-level design, modeling, implementation and test for realizing domain-specific NVMe streaming / recording architectures. | $1,880 per engineering day (or fixed-price project fee) |

Documentation

- NVMe Streamer Datasheet & Product Guide (available under NDA), covering:

  - IP Core and features description

  - Architecture choices

  - Performance analysis

  - FPGA resource numbers for AMD/Xilinx

  - IP Core code changelog

- Linux ZynqMP PS-PCIe Root Port Driver (a software-only, non-accelerated alternative described by the Xilinx Wiki)

- Example designs on GitHub from Opsero (for PS-based NVMe, supporting various FPGA and MPSoC evaluation boards)

Frequently Asked Questions

Does the NVMe IP core, NVMe Streamer, support file systems?

No. NVMe Streamer provides so-called block storage, so file systems are not supported. For each data transfer, the user application logic selects a start address and a maximum end address, and the data is then written to flash linearly. This achieves the best performance and avoids write amplification.
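
To illustrate linear block writes, the sketch below splits a region into NVMe Write commands. Each command is described by a starting LBA and the 0's-based Number of Logical Blocks field that NVMe I/O commands use. Several assumptions here do not come from MLE's documentation:

- the end address is exclusive;
- LBAs are 512 bytes;
- each command carries at most 128 KiB, which is the per-entry limit quoted in the IO Queue answer below.

`split_linear_region` and `WriteCmd` are hypothetical names.

```python
from typing import Iterator, NamedTuple


class WriteCmd(NamedTuple):
    slba: int        # starting LBA of this command
    nlb_0based: int  # NVMe "Number of Logical Blocks" field, 0's based


def split_linear_region(start_lba: int, end_lba: int, lba_size: int = 512,
                        max_xfer: int = 128 * 1024) -> Iterator[WriteCmd]:
    """Yield write commands covering [start_lba, end_lba) strictly front to back."""
    if max_xfer % lba_size:
        raise ValueError("max_xfer must be a multiple of the LBA size")
    blocks_per_cmd = max_xfer // lba_size
    lba = start_lba
    while lba < end_lba:
        count = min(blocks_per_cmd, end_lba - lba)  # last command may be shorter
        yield WriteCmd(lba, count - 1)
        lba += count
```

With 512-byte LBAs, each full command spans 256 blocks. Addresses only ever increase, so the access pattern stays sequential.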

Does NVMe Streamer support drive partitions?

Partitions are not explicitly supported. However, the user application logic can use NVMe Streamer to read the SSD’s partition table and then set up transfers whose start and maximum end addresses are aligned to the partitions.
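
Here is a minimal sketch of the partition-table step. It assumes the SSD uses a GUID Partition Table (GPT) as defined in the UEFI specification. The GPT header sits at LBA 1. Each partition entry, 128 bytes or larger, stores its first and last LBA (inclusive) at byte offsets 32 and 40. `read_lba` stands in for whatever read path the user logic has to the drive; like `parse_gpt` and `Partition`, it is hypothetical. The sketch also skips the CRC32 checks and the backup header, both of which a robust parser should verify.

```python
import struct
from typing import Callable, List, NamedTuple


class Partition(NamedTuple):
    first_lba: int
    last_lba: int  # inclusive, as stored in the GPT entry
    name: str


def parse_gpt(read_lba: Callable[[int], bytes], lba_size: int = 512) -> List[Partition]:
    """Parse a GUID Partition Table; read_lba(n) must return the bytes of LBA n."""
    header = read_lba(1)
    if header[0:8] != b"EFI PART":
        raise ValueError("no GPT signature at LBA 1")
    # PartitionEntryLBA (u64), NumberOfPartitionEntries (u32), SizeOfPartitionEntry (u32).
    entries_lba, num_entries, entry_size = struct.unpack_from("<QII", header, 72)
    n_lbas = -(-(num_entries * entry_size) // lba_size)  # ceiling division
    table = b"".join(read_lba(entries_lba + i) for i in range(n_lbas))
    parts = []
    for i in range(num_entries):
        entry = table[i * entry_size:(i + 1) * entry_size]
        if entry[0:16] == bytes(16):  # all-zero type GUID marks an unused entry
            continue
        first, last = struct.unpack_from("<QQ", entry, 32)
        name = entry[56:128].decode("utf-16-le").split("\x00", 1)[0]
        parts.append(Partition(first, last, name))
    return parts
```

Each partition's `first_lba` and `last_lba` could then bound the start and maximum end address of a transfer confined to that partition.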

Does "NVMe Streamer" support NVMe namespaces?

Only a single namespace is supported.

How many SSDs can be connected to NVMe Streamer?

By default, NVMe Streamer connects directly to a single NVMe SSD. The FPGA acts as the PCIe Root Complex and the SSD acts as the PCIe Endpoint. However, we can customize NVMe Streamer for your application to support more complex PCIe topologies, including multiple direct-attached SSDs, multiple SSDs connected via a 3rd party PCIe switch chip, or even PCIe Peer-to-Peer. Please ask us for more details.

How many NVMe IO Queues does NVMe Streamer support and what is the depth of the NVMe IO Queue?

NVMe Streamer currently supports a single IO Queue. This IO Queue can have up to 128 entries, each carrying up to 128 KiB of data, i.e. up to 16 MiB of “data in flight”. If needed, we can change the depth and size of this IO Queue. However, given the needs of streaming applications, increasing the number of IO Queues may not be advantageous.
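
The 16 MiB figure is simply the queue depth times the per-entry data size. The sketch below models how such a queue limits outstanding data. It treats the 128 entries as the limit on outstanding commands, as the 16 MiB figure does. Once 128 commands are outstanding, submission stalls; each completion frees one slot. `InFlightWindow` is a hypothetical name. Completing the oldest command first is a simplification, since NVMe completions may arrive out of order.

```python
from collections import deque

QUEUE_DEPTH = 128           # IO queue entries, per the FAQ answer above
MAX_CMD_BYTES = 128 * 1024  # up to 128 KiB of data per entry


class InFlightWindow:
    """Hypothetical model of queue-depth flow control on a single IO queue."""

    def __init__(self, depth: int = QUEUE_DEPTH, max_cmd_bytes: int = MAX_CMD_BYTES) -> None:
        self.depth = depth
        self.max_cmd_bytes = max_cmd_bytes
        self.outstanding: deque = deque()  # sizes of submitted, not yet completed commands

    def can_submit(self) -> bool:
        return len(self.outstanding) < self.depth

    def submit(self, nbytes: int) -> None:
        if nbytes > self.max_cmd_bytes:
            raise ValueError("command exceeds the per-entry data limit")
        if not self.can_submit():
            raise RuntimeError("queue full: wait for a completion first")
        self.outstanding.append(nbytes)

    def complete_oldest(self) -> int:
        # Simplification: real NVMe completions may arrive out of order.
        return self.outstanding.popleft()

    def bytes_in_flight(self) -> int:
        return sum(self.outstanding)


# Worst case: every entry carries the full 128 KiB.
print(QUEUE_DEPTH * MAX_CMD_BYTES // 2**20, "MiB")  # prints: 16 MiB
```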

Does NVMe Streamer support PCIe Peer-to-Peer?

Yes, this is supported in a customized configuration. Peer-to-Peer transfers can be very attractive because they free up the host CPU. Team MLE can customize NVMe Streamer for your application to support more complex PCIe topologies, such as multiple direct-attached SSDs, multiple SSDs connected via a 3rd party PCIe switch chip, and PCIe Peer-to-Peer. Please ask us for more details.

What are the user interfaces of the "NVMe Streamer" IP core?

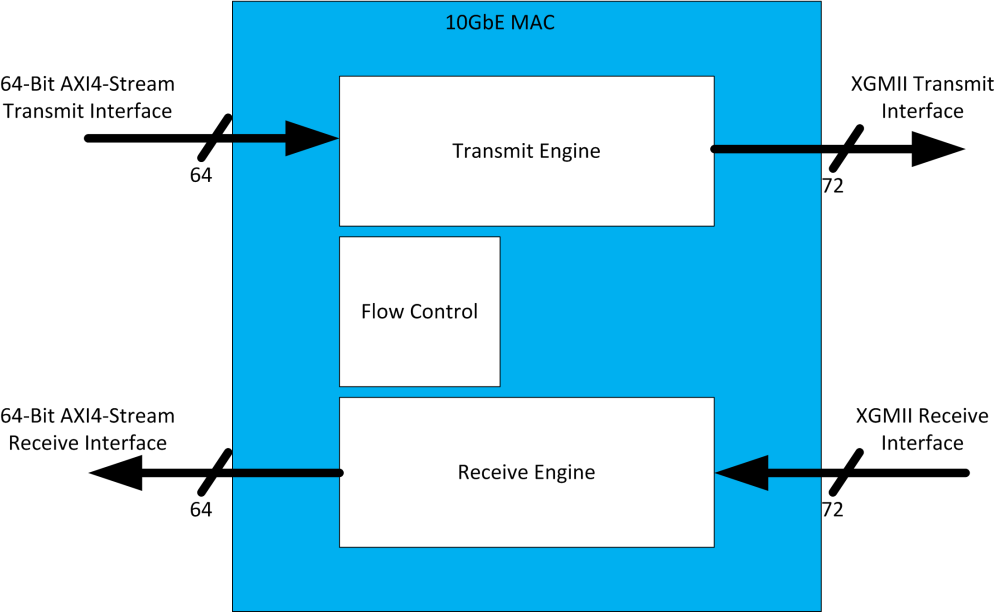

NVMe Streamer can be configured via an AXI4-Lite register space, which is also used to set up and control streaming transfers. The actual data exchange is then handled via an AXI4-Stream master and slave. Some GPIO-style status signals for informational purposes, such as driving LEDs, are provided as well. All of this is documented in our developer’s guide.
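
The AXI4-Stream data path follows the standard AMBA handshake: a beat is transferred only in a clock cycle where TVALID from the master and TREADY from the slave are both high. The Python sketch below models just that generic rule, assuming a master that asserts TVALID whenever it has data. It does not describe NVMe Streamer's register map or timing, which are covered by the developer’s guide. `axis_transfer` is a hypothetical name.

```python
from typing import List, Sequence


def axis_transfer(beats: Sequence[int], tready: Sequence[bool]) -> List[int]:
    """Cycle-level model of one AXI4-Stream link.

    Each element of `tready` is the slave's TREADY in one clock cycle. A beat
    moves only when TVALID and TREADY are both high; otherwise the master holds
    the same TDATA for the next cycle.
    """
    received: List[int] = []
    idx = 0
    for ready in tready:            # one iteration per clock cycle
        tvalid = idx < len(beats)   # master keeps TVALID high while it has data
        if tvalid and ready:
            received.append(beats[idx])
            idx += 1
    return received


# Slave stalls in cycles 1 and 3; all three beats still arrive, in order.
print(axis_transfer([10, 20, 30], [True, False, True, False, True]))  # prints [10, 20, 30]
```

When TREADY is low in a cycle, the stream stalls without losing data. This is how an AXI4-Stream slave applies backpressure.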

How many parallel streams can be processed?

Currently, NVMe Streamer supports only a single stream of data. It is therefore the designer’s responsibility to buffer additional streams and provide them to NVMe Streamer once the active stream is finished. Alternatively, multiple streams can be multiplexed while writing to flash. This works well, for example, for multiple ADC inputs with the same sample rate and sample width.
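
For the multiplexing option, equal sample rates and widths mean that every frame has the same layout. Channels can therefore be interleaved sample by sample into one stream and separated again after readback. Below is a minimal Python sketch with hypothetical function names. Samples are modeled as integers rather than packed ADC words.

```python
from typing import List, Sequence


def interleave(channels: Sequence[Sequence[int]]) -> List[int]:
    """Merge equal-rate channels sample by sample: ch0[0], ch1[0], ..., ch0[1], ..."""
    if len({len(ch) for ch in channels}) != 1:
        raise ValueError("need at least one channel, all with the same sample count")
    return [sample for frame in zip(*channels) for sample in frame]


def deinterleave(stream: Sequence[int], n_channels: int) -> List[List[int]]:
    """Recover each channel from an interleaved recording read back from the SSD."""
    if len(stream) % n_channels:
        raise ValueError("stream length is not a whole number of frames")
    return [list(stream[i::n_channels]) for i in range(n_channels)]
```

In hardware, the same ordering would typically come from a round-robin multiplexer placed in front of the stream input.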

Does NVMe Streamer support M.2 PCIe connectivity?

Yes. Because NVMe Streamer is agnostic to the form factor of your SSD, M.2, U.2, EDSFF and so on are all supported, as long as your SSD “speaks the NVMe protocol” rather than SATA or SAS.

What are the best SSDs to use and from which vendor?

While, again, NVMe Streamer is compatible with any NVMe SSD, there are several other aspects to keep in mind when selecting an NVMe SSD: noise, vibration, harshness, temperature throttling, local RAM buffers, memory type (SLC, MLC, TLC, QLC, 3D XPoint), etc. To help our customers deliver solutions with dependable performance, we have worked with a set of 3rd party SSD vendors and would be happy to give you technical guidance in your project. Please inquire.