Network Function Accelerators, FACs, NICs and SmartNICs

NICs, SmartNICs, and Function Accelerator Cards with Network Accelerator

A Network Interface Card (NIC) is a component that connects computers via networks, these days mostly via IEEE Ethernet. But what makes a NIC a SmartNIC? And how can an FPGA Network Accelerator make it operate more efficiently and enhance its performance to deliver deterministic networking?

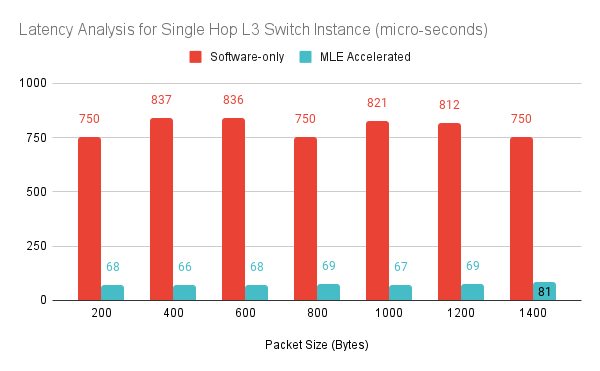

With the push for Software-Defined Networking, (mostly open-source) software running on standard server CPUs became a more flexible and cost-effective alternative to custom networking silicon and appliances. However, in the post-Dennard-scaling era, server CPU performance improvements cannot keep up with the increasing computational demand of faster network port speeds.

This widening performance gap creates the need for so-called SmartNICs. SmartNICs not only implement a Domain-Specific Architecture for network processing but also offload portions of the network processing stack from the host CPUs and thereby free up CPU cores to run the “real” application.

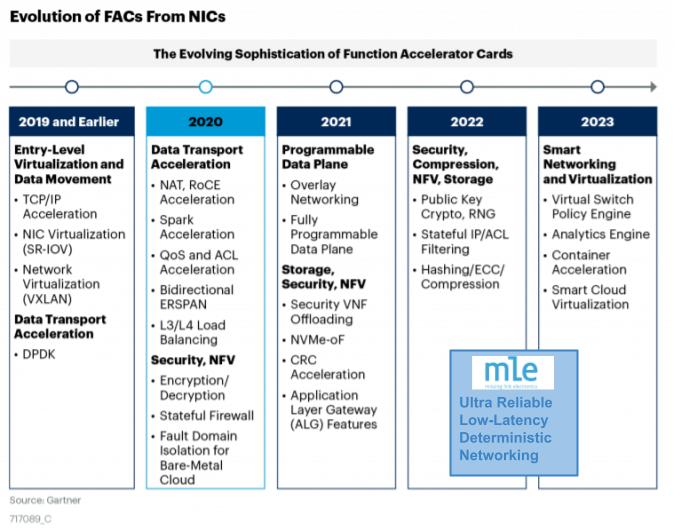

According to Gartner, Function Accelerator Cards (FACs) incorporate functions on the NIC that would otherwise have been done on dedicated network appliances. Hence, all FACs are essentially NICs, but not all NICs/SmartNICs are FACs. When deployed properly, FACs can increase bandwidth, reduce transport latencies, and improve compute efficiency, which translates to lower energy consumption.

Features of FPGA Network Accelerator

Ultra-Reliable, Low-Latency, Deterministic Networking

With ultra-reliable, low-latency, deterministic networking we have borrowed a concept from 5G wireless communication (5G URLLC) and have applied this to LAN (Local Area Network) and WAN (Wide Area Network) wired communication:

- Ultra-Reliable means no packets get lost in transport

- Low-Latency means that packets get processed by a FAC at a fraction of CPU processing times

- Deterministic means that there is an upper bound for transport and for processing latency

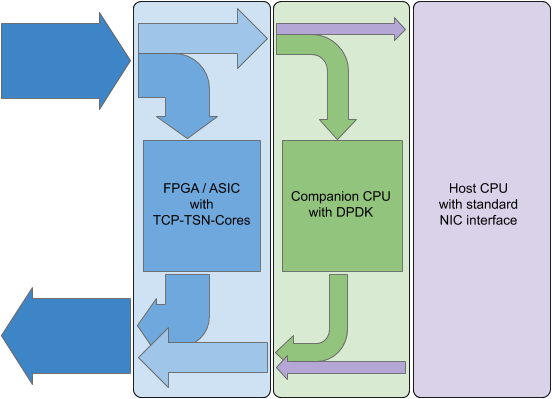

We do this by combining the TCP protocol, fully accelerated (in FPGA or ASIC using NPAP), with TSN (Time Sensitive Networking) optimized for stream processing at data rates of 10/25/50/100 Gbps. These so-called TCP-TSN-Cores, which form the FPGA network accelerator, provide not only precise time synchronization but also traffic shaping, traffic scheduling, and stream reservation with priorities.

We believe that FPGAs are very well positioned as programmable compute engines for network processing because FPGAs can implement “stream processing” more efficiently than CPUs or GPUs can. In particular, when the networking data stays local to the FPGA fabric, Data-in-Motion processing can be done within 100s of clock cycles (which is 100s of nanoseconds), and results can be sent back a few 100 clock cycles later, an aspect which is referred to as Full-Accelerated In-Network Compute.
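To make the relation between clock cycles and nanoseconds concrete, here is a small worked conversion in Python. It is only an illustration: the function name `cycles_to_ns` and the clock frequencies of 250 MHz and 400 MHz are assumptions chosen for the example, not figures from MLE.

```python
def cycles_to_ns(cycles: int, clock_mhz: float) -> float:
    """Convert a number of clock cycles to nanoseconds.

    One cycle at f MHz lasts 1000 / f nanoseconds.
    """
    return cycles * 1_000.0 / clock_mhz


# Assumed example fabric clocks (hypothetical, for illustration only).
for clock_mhz in (250.0, 400.0):
    for cycles in (100, 300):
        latency_ns = cycles_to_ns(cycles, clock_mhz)
        print(f"{cycles} cycles @ {clock_mhz:.0f} MHz = {latency_ns:.0f} ns")
```

At clock rates in this assumed range, a few hundred cycles do land in the range of a few hundred nanoseconds to about one microsecond.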

While FPGA technology has been at the forefront of Moore’s Law, and modern devices such as AMD/Xilinx Versal Prime, Intel Agilex, or Achronix Speedster7t can hold millions of gates, FPGA processing resources must be used wisely when Bill-of-Materials costs are important. Therefore, at MLE we have put together a unique combination of FPGA and open-source software to achieve best-in-class performance while addressing cost metrics more in line with CPU-based SmartNICs.

Unique and Cost-Efficient Combination of Open Source

The Open Source Technologies We Borrow From

- Meanwhile highly optimized for networking

- An open source multi-layer network switch

- An open source High-Level Synthesis engine

- The GitHub project focusing on AMD/Xilinx Alveo cards

- The High-Level Synthesis Frontend for Xilinx FPGAs

High-Level Synthesis plays a vital role in our implementation as it allows MLE and MLE customers to turn algorithms implemented in C/C++/SystemC into efficient FPGA logic which is portable between different FPGA vendors.

To build a high-performance FAC platform, portions of the above have been integrated together with proven 3rd party networking technologies:

- NPAP, the Network Protocol Accelerator Platform which is a TCP/UDP/IP Full Accelerator that comes from Fraunhofer HHI

- TSN, which is Time Sensitive Networking, a collection of IEEE Standards implemented by Fraunhofer IPMS

Corundum In-Network Compute + TCP Full Accelerator

Corundum is an open-source FPGA-based NIC which features a high-performance datapath between multiple 10/25/50/100 Gigabit Ethernet ports and the PCIe link to the host CPU. Corundum has several unique architectural features: For example, transmit, receive, completion, and event queue states are stored efficiently in block RAM or ultra RAM, enabling support for thousands of individually-controllable queues.

MLE is a contributor to the Corundum project. Please visit our Developer Zone for services and downloads for Corundum full system stacks pre-built for various in-house and off-the-shelf FPGA boards.

MLE combines the Corundum NIC with NPAP, the TCP/UDP/IP Full Accelerator from Fraunhofer HHI, via a so-called TCP Bypass which minimizes the processing latency of network packets: Each packet gets processed in parallel by the Corundum NIC and by NPAP. The moment it can be determined that the packet shall be handled by NPAP (based on IP address and port number), this packet gets invalidated inside the Corundum NIC. If a packet shall not be processed by NPAP, it gets dropped in NPAP and will be processed solely by the Corundum NIC.

Fundamentally, this implements network protocol processing in multiple stages: Latency-sensitive network data gets processed using full acceleration, while all other network traffic is handled by a companion CPU and/or by the host CPU.
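The steering decision behind the TCP Bypass can be pictured as a lookup on a packet's address and port. The following Python sketch models only that decision and is not MLE's implementation, which runs in FPGA logic. The `Packet` and `Verdict` types, the `classify` function, the choice of destination IP and destination port as the lookup key, and the example flow table are all hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple

FlowKey = Tuple[str, int]  # (IP address, port); hypothetical key layout


@dataclass(frozen=True)
class Packet:
    dst_ip: str
    dst_port: int
    payload: bytes = b""


@dataclass(frozen=True)
class Verdict:
    npap_handles: bool      # packet stays valid in the full accelerator
    corundum_handles: bool  # packet stays valid in the NIC datapath


def classify(pkt: Packet, accelerated_flows: FrozenSet[FlowKey]) -> Verdict:
    """Decide which of the two parallel paths keeps the packet.

    Both paths see every packet. On a match the NIC copy is invalidated,
    otherwise the accelerator copy is dropped, so exactly one path wins.
    """
    hit = (pkt.dst_ip, pkt.dst_port) in accelerated_flows
    return Verdict(npap_handles=hit, corundum_handles=not hit)


# Hypothetical flow table: one latency-sensitive TCP endpoint.
flows = frozenset({("10.0.0.2", 5001)})
print(classify(Packet("10.0.0.2", 5001), flows))  # accelerated flow
print(classify(Packet("10.0.0.2", 22), flows))    # everything else
```

The point of the sketch is the invariant: every packet ends up on exactly one path, so latency-sensitive flows never wait on the CPU path and all other traffic never occupies the accelerator.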

Applications of FPGA Network Accelerator

MLE’s Network Accelerators are of particular value where network bandwidth and latency constraints are key:

- Wired and Wireless Networking

- Acceleration of Software-Defined Wide Area Networks (SD-WAN)

- Video Conferencing

- Online Gaming

- Industrial Internet-of-Things (IIoT)

- Handling of Application Oriented Network Services

- Mobile 5G User-Plane Function Acceleration

- Mobile 5G URLLC Core Network Processing with TSN

- Offloading Open vSwitch (OvS), vRouter, etc.

Key Benefits

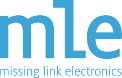

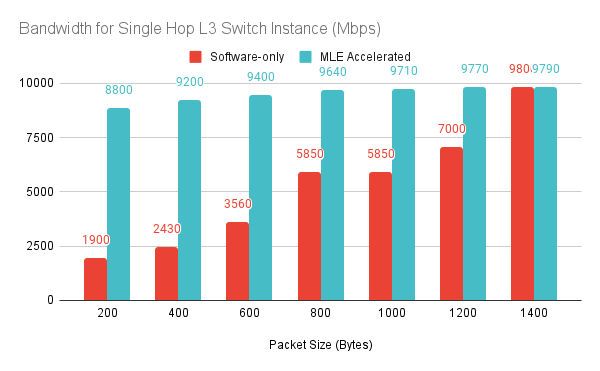

The following shows the key benefits of MLE’s technology by comparing open-source SD-WAN switching in native CPU software mode against MLE’s FPGA Network Accelerator:

Compared with plain CPU software processing, MLE’s Ultra-Reliable Low-Latency Deterministic Networking increases network bandwidth and throughput close to Ethernet line rates, in particular for smaller packets, which reduces the need for over-provisioning within the backbone. In addition, processing latencies can be shortened significantly, which is important, for example, when delivering a lively audio/video conferencing experience over WAN.
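Why smaller packets are the hard case follows from simple Ethernet arithmetic: each frame carries a fixed 20 bytes of on-wire overhead (7-byte preamble, 1-byte start-of-frame delimiter, 12-byte inter-frame gap), so the packet rate at line rate rises sharply as frames shrink. The Python sketch below works this out. The function names and the selection of link speeds and frame sizes are chosen for illustration and are not MLE measurements.

```python
# Per-frame on-wire overhead: 7 B preamble + 1 B SFD + 12 B inter-frame gap.
ETH_OVERHEAD_BYTES = 20


def line_rate_pps(link_gbps: float, frame_bytes: int) -> float:
    """Maximum packets per second at line rate for a given frame size.

    frame_bytes is the Ethernet frame length including the 4-byte FCS
    (64 bytes is the minimum frame size).
    """
    bits_on_wire = (frame_bytes + ETH_OVERHEAD_BYTES) * 8
    return link_gbps * 1e9 / bits_on_wire


def ns_per_packet(link_gbps: float, frame_bytes: int) -> float:
    """Time budget per packet, in nanoseconds, when running at line rate."""
    return 1e9 / line_rate_pps(link_gbps, frame_bytes)


for gbps in (10, 25, 100):
    for size in (64, 1518):
        mpps = line_rate_pps(gbps, size) / 1e6
        budget = ns_per_packet(gbps, size)
        print(f"{gbps:>3} Gbps, {size:>4} B: {mpps:8.2f} Mpps, {budget:8.2f} ns/packet")
```

For minimum-size frames the per-packet budget shrinks to tens of nanoseconds at 10 Gbps and to single-digit nanoseconds at 100 Gbps, which leaves a general-purpose CPU core very little time per packet.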

Availability

MLE’s FPGA Network Accelerator is available as a licensable full system stack and delivered as an integrated hardware/firmware/software solution. In close collaboration with partners in the FPGA ecosystem, MLE has ported and tested variations of the stack on a growing list of FPGA cards. Currently, this list comprises high-performance 3rd party hardware as well as MLE-designed cost-optimized hardware:

| FPGA Card | Hardware Description & Features | Status |
|---|---|---|
| NPAC-Ketch | MLE-designed single-slot FHHL PCIe card | Available (Inquire) |
| Alveo U280 | AMD/Xilinx-designed dual-slot FHFL PCIe card | Early Access |
| N6000-PL | Intel-designed single-slot FHHL PCIe card | Early Access |

Documentation

- Ultra-Reliable, Low-Latency, Deterministic Networking

- Function Accelerator Card – NPAC-40G